Pickle.com's "Verifiable Privacy": Marketing vs Reality

Published on January 6, 2026 - Author: David Cheal

Pickle is selling “verifiable privacy” for Pickle OS and the Pickle 1 glasses. The problem is that the product customers can use today doesn’t match the story Pickle is selling.

That's some shiny vapourware you've got there, Pickle

Pickle is selling “verifiable privacy” and encouraging people to sync their email, calendar, Notion, etc. That’s a hell of a promise. It also means the risk isn’t theoretical: people are already putting real, sensitive data into this thing.

I don’t know much about AR glasses. I do know about scalable infrastructure, cybersecurity, and IT operations. After reading Matthew Dowd’s thread, I read Pickle’s public docs and tested Pickle OS via the free trial. The result is pretty straightforward: Pickle’s security story doesn’t match what’s actually deployed.

Pickle.com is pitching an ambitious product: a "personal memory" system that ingests your data (email, calendar, Notion, etc.), builds a structured understanding of your life ("memories", nodes/edges, insights), and lets you query it with LLM-powered intelligence, while claiming privacy guarantees comparable to end-to-end encrypted messaging.

Their public content related to cybersecurity and SaaS infrastructure leans heavily on a security architecture built around:

- AWS Nitro Enclaves

- remote attestation (an ImageSha384 measurement)

- ephemeral enclave keys

- "blind relay" TLS tunnels to LLM providers

- and a client-held "Master Key" that Pickle supposedly cannot access.

After reviewing their docs and testing Pickle OS via the free trial, I found that the security claims of this platform fall apart very quickly.

There are big gaps between the promised end-state and the deployed reality, plus obvious contradictions that make it hard to tell what is marketing and what is real tech. The biggest issue is that Pickle has already launched Pickle OS, yet it doesn't deliver the security and privacy described in Pickle's terms and privacy policy.

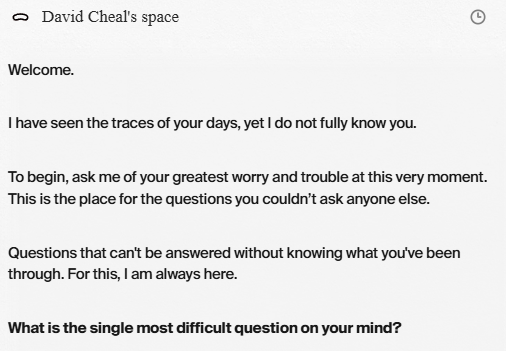

I also know that this is the most deranged LLM prompt I have ever seen a vendor offer.

- Authority + intimacy framing: “always here,” “what you’ve been through,” “questions you couldn’t ask anyone else” nudges dependency and emotional reliance.

- Vulnerability priming: asking for “greatest worry and trouble” is effectively a crisis prompt as the first touchpoint.

- Asymmetric context: because it just ingested email, the user can reasonably assume it knows things and is safe to disclose more — even if the security model is unclear.

- No safety rails in the copy: there’s no “I’m not a professional,” no crisis resources, no encouragement to seek real-world help if they’re unsafe.

- Dark-pattern adjacent: it’s an engagement hook masquerading as care.

For fucks sake, read the room Pickle!

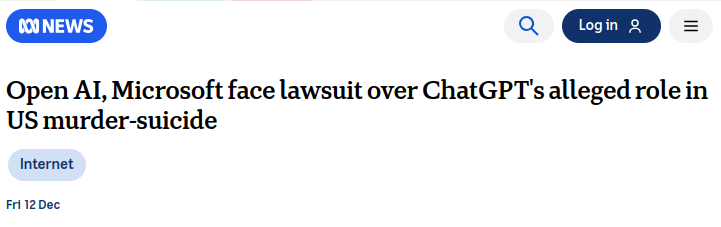

There are numerous active lawsuits in the US against LLM-enabled services for engaging with customers in unsafe ways. This prompt screams risk. Perhaps you should hire a Governance, Risk & Compliance Officer? "Move fast and break things" is a great idea until the broken thing is a human. Then you're just moving fast to the courts.

Product viability

Matthew Dowd has already written extensively about the viability of this product on X. He rightly points out that this is almost certainly vapourware / a scam, and will never see the light of day. You can read his article here: https://x.com/thedowd/status/2007337800430198913?s=20

I wanted to focus on the cybersecurity and operations aspect of the product, because:

- Cybersecurity and AI are interests of mine

- Some poor sods are already feeding this thing their data, and there is zero chance of it being secured.

Even ignoring that the source code is not available and that no demo product has been shown, there are already real cybersecurity issues.

For clarity, I have used the following information to write this post:

When considering a vendor's cybersecurity claims, I always take the following approach:

- Filter out the marketing (easy: assume it's bollocks until shown otherwise)

- Evaluate the financial viability of the product/service claims (difficult)

- Evaluate technical viability of the product/service claims (easy-to-hard, depending on transparency)

Marketing

As a general rule, all marketing claims related to cybersecurity should be considered false until verified by binding legal terms and demonstrated technology.

Claims like "Remembers everything" are obviously marketing bollocks and worded loosely enough to sound good, but not binding. There's no way this product continuously streams images/video to the servers for analysis and storage. That means there is some kind of infrequent, event-driven/manually triggered processing that does local analysis with server-side storage of critical data; i.e., "Remembers everything" means extracted data/metadata that you confirm is sent to the servers to be remembered.

At the moment, there are zero legal terms or technology that back up the Pickle marketing claims related to how this device is intended to work.

Note: I wrote this before the CEO confirmed in his X post that the device does not operate like other smart glasses and requires a phone to do its processing and connectivity.

Financial

The fastest way to spot tech bullshit is to follow the money. Specifically YOUR money.

You need to be paying a fee that covers capex and opex for the product/service. If that isn't high enough, then either the vendor is playing games with service delivery or the vendor is going broke.

To deliver on Pickle's product claims, Pickle needs to build a very expensive platform. The SaaS infrastructure, code, and the cybersecurity Pickle has outlined simply don't align with consumer goods and usage patterns. This kind of security is just too expensive for regular consumer wallets.

Hardware costs

The glasses are currently $899 USD for preorder ($1300 USD standard).

That price is inside the range of their competitors, so let's assume the price at least covers costs.

Monthly fees

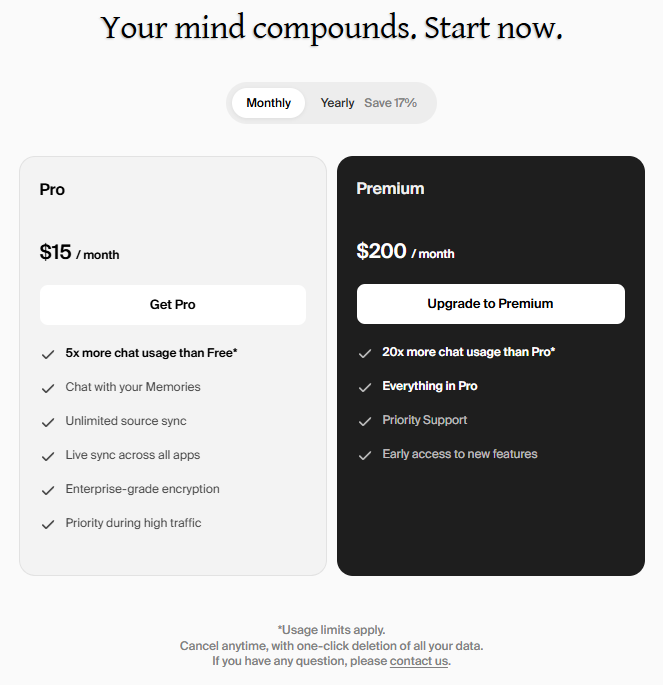

This is your first warning sign, and it's a big one.

- First, there is no mention of monthly fees on their website

- If you sign up for Pickle OS, you can see the monthly plans:

  - Free

  - Pro ($15 USD per month)

  - Premium ($200 USD per month)

By default, users are signed up for a free plan. That's standard for SaaS platforms and devices like smart glasses.

- Free is the fastest way to get users

- Telling users they have to pay a monthly fee is a great way to lose device sales.

Here's where it doesn't add up though:

- Compute, storage, databases, and security are operating costs that turn free users into a loss.

- The $15 plan helps, but there's no way Pickle is making any real margin on it. Even if customers pay but never log in, Pickle still carries constant opex (AWS, Stytch, etc.), plus the cost of ingesting at least three data sources (such as email) once per day via ETL/embeddings. That's direct operating costs plus LLM token costs.

- Thin-margin SaaS only survives on large volumes. Pickle doesn't have high volume, and likely never will.

- They can't sell your data, so that means no ad revenue.
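To make the margin argument concrete, here is a back-of-envelope model as a minimal Python sketch. Every cost figure in it is a placeholder chosen for illustration, not a Pickle number, and the model assumes every user costs the same to serve (a simplification). The point is the shape of the calculation: free users are pure cost, and ingestion runs whether or not anyone logs in.

```python
from dataclasses import dataclass


@dataclass
class CostPerUser:
    """Hypothetical direct operating cost per user per month, in USD.

    Placeholder figures only; nothing here comes from Pickle or its suppliers.
    """
    infrastructure: float  # compute, storage, databases (e.g. AWS)
    auth: float            # authentication service fees (e.g. Stytch)
    ingestion: float       # daily ETL/embedding runs, paid even if the user never logs in
    llm_tokens: float      # LLM calls that generate "memories" and insights

    def total(self) -> float:
        return self.infrastructure + self.auth + self.ingestion + self.llm_tokens


def margin_per_user(price: float, cost: CostPerUser) -> float:
    """Monthly gross margin for one user: subscription price minus direct cost."""
    return price - cost.total()


def total_margin(plans: dict, cost: CostPerUser) -> float:
    """Monthly gross margin across the user base.

    plans maps a plan name to (monthly price, number of users on that plan).
    Assumes every user costs the same to serve, which is a simplification.
    """
    return sum(margin_per_user(price, cost) * users for price, users in plans.values())


if __name__ == "__main__":
    cost = CostPerUser(infrastructure=2.0, auth=0.5, ingestion=3.0, llm_tokens=6.0)  # placeholders
    plans = {"free": (0.0, 900), "pro": (15.0, 100)}
    print(margin_per_user(15.0, cost))  # margin on a single Pro user
    print(total_margin(plans, cost))    # margin across the whole (hypothetical) base
```

With these placeholder inputs a Pro user nets $3.50 a month, and 900 free users wipe out the margin from 100 paying ones many times over (-$10,000 a month in total).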

Other devices in the market (Oakley glasses, etc.) have exactly the same problems, but they solve them in a different way:

- They have unique and expensive cybersecurity

- They sell your data

The monthly fee will determine how real the cybersecurity is or how fast the company goes broke.

There simply isn't enough cash in this business model to deliver the highly secure platform Pickle describes. Pickle will either deliver a far less secure platform than the marketing implies, or go broke.

Cybersecurity

The tech stack behind the average startup is typically pretty meh, and expectations should be kept very low. Pickle, however, has made a lot of (implied) commitments about protecting customer data from day one.

Those commitments to protect customer data are actually great in principle, especially considering the sensitivity of the content Pickle is managing. It would be great to see other companies set similar goals. The problem is that Pickle's reality falls far short of its goals. That makes me think it's either vapourware, not actually secure, or Pickle will go broke (or all three).

Let's look at the big claims:

- A client (Browser Extension/mobile app/desktop app/glasses) that generates a "Master Key"

- “Users Key: Your master key, which is generated on your device”

- "Clients—browser extensions and native apps—store the user's master key and verify the Enclave."

- Before sending data, the client verifies the server-side "Enclave" is running an approved image by checking a remote attestation measurement (ImageSha384 / PCR0) against its trusted list.

- The Enclave generates ephemeral keys at boot and uses them to receive the user secret securely

- Decryption happens only inside Enclave RAM; plaintext is wiped immediately after processing

- Storage is encrypted such that a database dump is "garbage" without the user secret.

- LLM calls terminate TLS inside the enclave; other servers merely relay encrypted bytes.

They share the following architecture diagram:

Note: If you want to know more about AWS Nitro (or need to fall asleep), you can read this AWS blog post.

Failure from the Start

You can sign up for Pickle OS right now. The process allows you to use Google Auth or email/password via the Stytch authentication service.

You can set MFA, which is great.

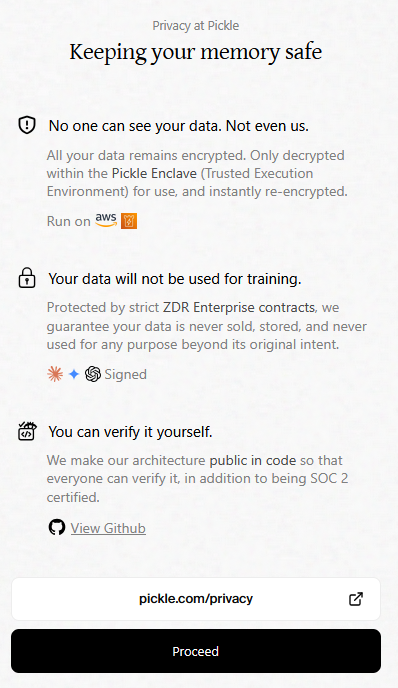

They reiterate their commitment to security and privacy.

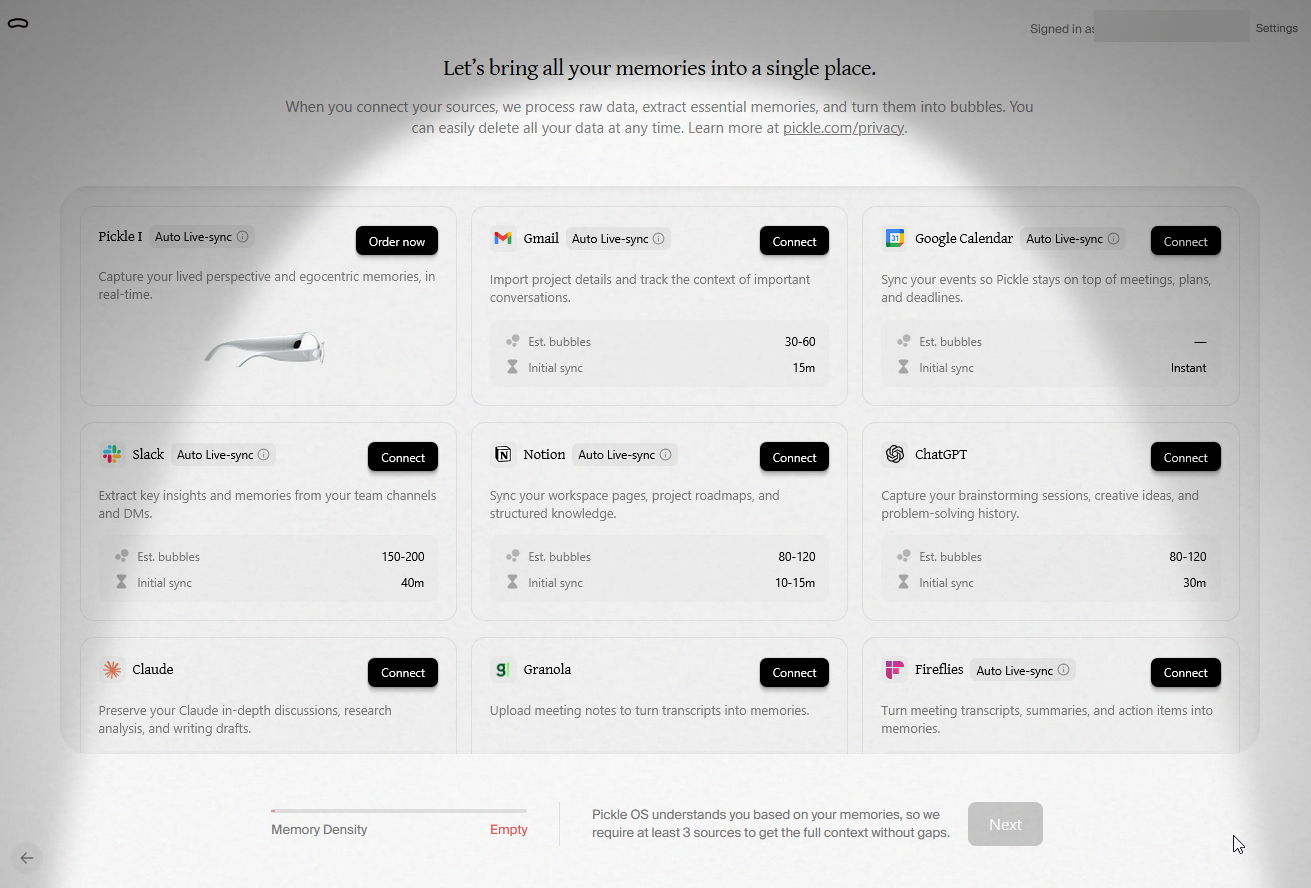

Then you have to select three sources of data to sync.

And now all your data is stored in a way that completely contradicts everything they promised about encryption and privacy.

- You don't have a device, so you didn't use it to make a Master Key

- There is no browser extension

- All your data just got encrypted with standard AWS KMS managed keys, which means Pickle can absolutely view it if it wishes.

On top of that, the integration landing screen straight up lies. They claim that "No one can access your data. Not us, not any attacker." As I've just shown and their CEO confirms, this is not true. They can read your data.

Daniel Park: "Currently, data uploaded to Pickle OS is protected by standard server-side encryption with AWS managed keys. This is the level most services provide, but it is not the level we are aiming for." https://x.com/danifesto/status/2008085704388063634

So Pickle doesn't have enclave security, or master-key-based encryption, but claims it will one day.

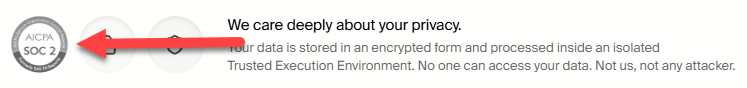

SOC 2

Pickle has slapped a SOC 2 logo on the marketing site and in the app. With no other mention of SOC 2 (no report, scope, or auditor) anywhere in Pickle's site/app/GitHub repo, that's a massive red flag.

If Pickle is going to use SOC marks, AICPA has pretty specific rules around registration and usage. It's not “whatever looks good in the hero banner”.

At a minimum, Pickle should be following the published logo guidance:

In practical terms, that generally means:

- Pickle is registered/authorised to use the SOC marks

- Pickle uses the marks as provided (no “creative” edits)

- Pickle follows the required linking/attribution rules

Also: there is no such thing as SOC 2 “certified”. SOC 2 is an attestation report (Type I or Type II) issued by an audit firm, with an opinion (unqualified/qualified/adverse/disclaimer). “SOC 2 certified” is marketing language.

So the obvious questions are:

- Has Pickle actually completed a SOC 2 engagement (Type I or Type II)?

- What’s the report date / period end date?

- What’s the scope (systems covered, trust services criteria, subservice orgs, carve-outs)?

- Will Pickle provide the report under NDA (standard practice)?

- If Pickle changes from the current KMS setup to the claimed Master Key + enclave model, will Pickle update the SOC 2 scope and re-attest after the change?

If Pickle wants an example of how to handle SOC 2 compliance details right, they can just ask Notion. Seeing as they integrate into the Notion services, I'm sure someone will help them out.

Right now, there's nothing public to back up the SOC 2 claims. Until Pickle can produce a real report, I treat “SOC 2 certified” as bullshit.

Team

Every company needs a competent team, and if a business is dealing with cybersecurity, they need staff with solid skills and experience. Pickle doesn't have it.

The current core team:

| Team Member | Role | Qualifications | Tech Experience |
|---|---|---|---|
| Daniel Park | CEO | Dr Medicine | 0 Years |
| Ho Jin Yu | CTO & Co-founder | Computer Science and Artificial Intelligence | 2 Years |
| SJ Lee | Co-founder | Masters of Business | 0 Years |
| Sanio Jung | Co-founder | Dr Medicine | 0 Years |
| Lucas Yoo | Product & Design | BSc | 1 Year |
| Tars Yang | Founding Engineer | High School - Hacking Defense 2024 | 1 Year |
| Wongyun Lee (Deleted their LinkedIn profile mid-article write-up) | Head of Pickle Studio | Started work in 2020 | 5 Years |
| Krithi Nalla | Design | Started work in 2021 | 5 Years |
| H. Jun Huh | Founding Engineer | Frontend dev in 2024 | 1 Year |

Personally, I don't stress over formal education. There are many excellent engineers and business people out there with little to no formal qualifications. But when Pickle's engineering staff have at most 2 years of working experience, that's an issue.

- None of this team has any meaningful experience building SaaS platforms

- More importantly, none of this team has any real experience in cybersecurity

These are the profiles of junior developers/engineers at best.

"Master Key"

There is no information about what this "Master Key" is, although the name implies it is a cryptographic key of some kind. That would be a remarkably bad idea and a massive red flag for this product.

Consumer services do not implement user-managed encryption keys for very good reasons, and it has nothing to do with technology. The implementation and administration are a massive pain in the ass.

As Pickle states in its privacy policy:

“Key Loss: Because we do not store your master key, if you lose your key/extension and have no backup, we cannot recover your data.”

The only other products that come close are password managers, and even then users don't generate an actual key they hold. Even “secure” products such as Proton / Signal have some kind of recovery mechanism, such as passphrase recovery.

Pickle clearly states the Master Key is generated and held on the device (glasses?):

“Users Key: Your master key, which is generated on your device”

However, Pickle also states:

"Clients—browser extensions and native apps—store the user's master key".

These two statements can't both be true at the same time.

What exactly is this “Master Key”?

The language Pickle uses implies the "key" is raw cryptographic material rather than a memorised secret; however, I suspect it is actually just a passphrase that a KDF (Argon2/PBKDF2/scrypt) turns into a key for handoff.
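If the passphrase theory is right, the mechanism is ordinary key derivation. Below is a minimal Python sketch, assuming PBKDF2-HMAC-SHA256 from the standard library as a stand-in for Argon2/scrypt, an illustrative iteration count, and a hypothetical per-user salt that can live server-side because it isn't secret. It shows the distinction that matters for multi-device use: a derived key can be recreated on any device from the passphrase, while a random key can only be copied.

```python
import hashlib
import os

# Illustrative work factor only; real deployments tune this (or use Argon2id/scrypt instead).
DEFAULT_ITERATIONS = 600_000


def derive_master_key(passphrase: str, salt: bytes, iterations: int = DEFAULT_ITERATIONS) -> bytes:
    """Stretch a passphrase into a 32-byte key with PBKDF2-HMAC-SHA256.

    Deterministic: the same passphrase and salt produce the same key on every device,
    so nothing secret has to be synced, only remembered.
    """
    return hashlib.pbkdf2_hmac("sha256", passphrase.encode("utf-8"), salt, iterations, dklen=32)


def generate_random_master_key() -> bytes:
    """A truly random 32-byte key. Nothing memorable derives it, so every device
    that needs it must receive a copy (and losing every copy loses the data)."""
    return os.urandom(32)


if __name__ == "__main__":
    salt = os.urandom(16)  # hypothetical per-user salt; not secret
    laptop = derive_master_key("correct horse battery staple", salt)
    phone = derive_master_key("correct horse battery staple", salt)
    print(laptop == phone)  # re-derived independently on two devices
```

A passphrase-based design trades the "sync a key" problem for the "remember a passphrase" problem, which is exactly the trade-off discussed below.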

If it is a cryptographic key:

- How does Pickle plan to share it across devices or even browser profiles on the same device? A user would need this key on the glasses, phone app, and all devices running a browser where they want to access Pickle OS. In Daniel Park's X post he states:

"Clients—browser extensions and native apps—store the user's master key and verify the Enclave."

That refers to the key as a singular item and as an entity to store, not a passphrase. There are also other issues with the Master Key concept as defined.

- Pickle appears to be rolling out Master Key encryption before the device is launched. So how is the key created?

- If it's created in the browser extension (Pickle has no timeline for a native app), how will Pickle manage syncing it across multiple devices (including the future glasses/apps) when Pickle can't access it?

- What happens if the user uninstalls the browser extension/clears extension data, or the browser corrupts it, etc.? The key would be lost and so would their data.

- How will they manage key rotation if a user has their key compromised?

If it's a passphrase/password:

- Why are they making a big deal about a “Master Key”? This gives the impression Pickle is attempting to confuse customers with cybersecurity language while not actually intending to implement it.

- The user has already authenticated via Stytch, and that service provides MFA (with the options Pickle has enabled). What value would another passphrase add beyond the password/MFA Stytch already provides?

- Passphrase access/unlock is the typical password manager security model. It is far less painful, but I am struggling to see why Pickle would place so much emphasis on the Enclave and Master Key if this is the case.

- Another passphrase also introduces all the usual ops issues, such as:

  - Forgotten password = permanent loss (if no recovery)

  - Risk of weak passwords

  - Recovery mechanisms can reintroduce escrow

As of now, the manner in which the Master Key is defined in Pickle's documentation doesn't add up.

Browser extension

Pickle implies a browser extension can hold the key. That's possible, but there are only a few ways, all with trade-offs:

- Local encrypted key blob (wrapped by passphrase): secure-ish, but requires passphrase entry and explicit device transfer.

  - Non-technical consumers are 100% going to fuck this up

- Non-extractable CryptoKey: better protection, worse portability.

  - Again, typical users won't be able to do this

- Browser sync (e.g., Chrome extension sync): convenient, but introduces reliance on Google/Microsoft sync and still requires encryption discipline. This would be a very bad idea for multiple reasons, including:

  - Pickle would need to encrypt the Master Key (or even the passphrase) before allowing sync. Otherwise, a third party holds a usable key.

  - Extension sync is already messy and can fall out of sync and then trash itself resyncing. Ask anyone who has seen their bookmarks get shafted when signing in to an old browser session.

  - Extension encryption is messy and unreliable. Not only that, but you need a passphrase for the E2EE, so now the customer has to remember that as well.

The hard truth: Pickle can't have all three of these at once:

- Zero vendor access

- Seamless multi-device

- No user-managed recovery burden

They get to pick 2 out of 3.

Attestation and ImageSha384

There is only one way to verify an Enclave ImageSha384 hash. You have to get the source code, the entire build pipeline process, variables, and pinned versions of all dependencies, etc. Then you can replicate the build on the same CI/CD service and enclave platform. This should produce the same ImageSha384 as Pickle provides.

This obviously cannot be done from a browser extension or any other form of consumer app.

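An app/extension can only check that the attested ImageSha384 matches one of the trusted ImageSha384 values it stores. It does this by first verifying the AWS attestation signature (proving the attestation document came from genuine Nitro hardware) and then comparing the hash against its list.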

So I suspect there is far more marketing than cybersecurity in these claims.
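For reference, the client-side comparison is about this small. This is a minimal Python sketch that assumes the attestation document's COSE_Sign1 signature and certificate chain have already been validated against the AWS Nitro root (that step is omitted), and it uses placeholder hashes rather than real Pickle measurements. Note what it proves: the server is running an image on the list, not that the image does what the marketing says.

```python
import string

# Hypothetical allowlist shipped inside the client. ImageSha384 / PCR0 is a SHA-384
# measurement: 48 bytes, shown as 96 hex characters. These values are placeholders.
TRUSTED_IMAGE_SHA384 = frozenset({
    "0" * 96,
    "f" * 96,
})


def is_trusted_measurement(attested_hex: str, trusted: frozenset = TRUSTED_IMAGE_SHA384) -> bool:
    """Return True if an attested ImageSha384 is on the client's allowlist.

    Precondition (not implemented here): the attestation document's COSE_Sign1 signature
    and certificate chain have already been verified back to the AWS Nitro root.
    Without that, the measurement is just a string the server chose to send.
    """
    candidate = attested_hex.strip().lower()
    if len(candidate) != 96 or any(c not in string.hexdigits for c in candidate):
        return False  # not a well-formed SHA-384 hex digest
    # Measurements are public values, so a plain set lookup is fine (no secret to leak via timing).
    return candidate in trusted
```

Every time the enclave image changes, this set has to change too, which is the update problem raised further down.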

When Pickle states customers can see how the browser extension “verifies the Enclave's ImageSha384”, do they mean:

- Verify that the ImageSha384 is coming from a trusted source and matches the allowed hashes stored in the extension?

Or

- Run the entire build pipeline using the open-source code that runs on the Enclave, so they can verify which code and build generate that ImageSha384 hash?

The first option is easy, but provides no meaningful security to the end user. It's just “Trust me, our servers do exactly what we say they do.”

The second option allows a user to verify that the enclave servers are running the exact code that Pickle claims it is. That process is extremely complex though, and beyond most IT engineers, let alone consumers.

Pickle also doesn't mention how the ImageSha384 values will get updated in the app code. Any change to the enclave image produces a new ImageSha384, invalidating the hashes clients already hold, so clients will need the new ones. Obviously, that update process has to be extremely secure; otherwise Pickle runs the risk of malicious ImageSha384 values being injected.

Again, this is a great deal of complex cybersecurity, at high cost, for no apparent gain or even realistic method of implementation.

"Decryption only happens in the enclave" doesn't answer the exfiltration risk by itself

Even in a perfect Nitro enclave model:

Scheduled Tasks (server-side)

Pickle OS integrations sync anywhere from continuously to daily.

Under the claimed Master Key model, how exactly can this happen? It shouldn't be possible.

The Google Gmail integration, for example, is straightforward. I enable the integration, Google hits me up with a prompt to allow it, and Pickle receives a long-lived token that allows access. Pretty standard. However:

- Once you fetch the data, you need to process and store it.

- How can you store it without my Master Key? That's something you can't get unless I log in and actively provide it. Remember, Pickle has no access to the key; only the user does.

- So how can a server-side scheduled task possibly work?

- What if a user syncs their email account and then doesn't log in for 2 months?

- What if they log out and then delete the Master Key? How would Pickle's servers even know? And if they don't know, how can Pickle ingest more data at any time?
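Modelled in code, the paradox is easy to see. This is a hypothetical Python sketch of the claimed architecture, not Pickle's code, and every name in it is invented for illustration: the server legitimately holds a long-lived OAuth token, but in the promised model the Master Key only exists while the user has an active session.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Account:
    user_id: str
    oauth_token: Optional[str]                  # long-lived Google token: the server CAN hold this
    session_master_key: Optional[bytes] = None  # present only while the user is logged in (claimed model)


class MasterKeyUnavailable(Exception):
    """Raised when fetched data cannot be encrypted under the user's Master Key."""


def ingest_for(account: Account) -> str:
    if account.oauth_token is None:
        return "skipped: no integration"
    # Fetching email with the token works fine; storing it is the problem.
    if account.session_master_key is None:
        # Every way forward breaks a promise: keep plaintext, fall back to
        # vendor-held keys (KMS), or drop the data. So the job has to stop here.
        raise MasterKeyUnavailable(account.user_id)
    return "ingested"


def run_nightly_sync(accounts: List[Account]) -> Dict[str, str]:
    """Hypothetical scheduled job: one ingestion attempt per account."""
    results: Dict[str, str] = {}
    for account in accounts:
        try:
            results[account.user_id] = ingest_for(account)
        except MasterKeyUnavailable:
            results[account.user_id] = "blocked: Master Key not available"
    return results
```

Only users who happen to be logged in when the job runs can be synced; everyone else (including the user who hasn't logged in for 2 months) is blocked.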

Data Migration

Pickle states that customer data will be migrated to the target state, and that “When the new security architecture is applied, existing data will be re-encrypted with the user's master key”.

However, Pickle will have no way to achieve this unless:

- The user logs in prior to the migration date

- The user generates a Master Key

- They either have their data migrated at that time, or it needs to be done after a future sign-in event

The only practical model is to flag the accounts and force a Master Key generation and migration on next sign-in. Otherwise, Pickle will be left with the legacy encryption model and data, never able to abandon the KMS and supporting server codebase. Every DB / Vector migration would be moving around KMS-encrypted data that nobody is using (they haven't logged in) that Pickle would have to manage forever. In the worst case, Pickle would still be ingesting even more data via the integrations and encrypting it with the legacy model.
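A minimal Python sketch of that flag-and-migrate-on-sign-in model, with hypothetical names and the actual cryptography omitted (changing the `scheme` label stands in for decrypting with KMS and re-encrypting with the Master Key). It also shows the worst case: integrations keep adding KMS-encrypted data for users who never come back.

```python
from dataclasses import dataclass
from typing import List

LEGACY = "kms"         # vendor-managed keys: Pickle can read this data
TARGET = "master_key"  # the promised user-held key model


@dataclass
class Account:
    user_id: str
    scheme: str = LEGACY  # every existing account starts flagged for migration


@dataclass
class StoredItem:
    user_id: str
    scheme: str
    payload: str


def ingest(account: Account, payload: str, store: List[StoredItem]) -> None:
    """Scheduled integrations store new data under whatever scheme the account is on."""
    store.append(StoredItem(account.user_id, account.scheme, payload))


def on_sign_in(account: Account, store: List[StoredItem]) -> None:
    """Hypothetical sign-in hook: the only moment a Master Key can be created and used."""
    if account.scheme == LEGACY:
        account.scheme = TARGET
        for item in store:
            if item.user_id == account.user_id:
                item.scheme = TARGET  # stands in for KMS-decrypt + Master-Key re-encrypt


def vendor_readable(store: List[StoredItem]) -> int:
    """How many stored items are still under the legacy, vendor-readable scheme."""
    return sum(1 for item in store if item.scheme == LEGACY)
```

If one user signs in and another never does, the second user's legacy pile keeps growing with every sync, and the KMS path can never be retired.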

Any retention of legacy encryption invalidates Pickle's Privacy Policy and Terms and Conditions.

Now add real SaaS realities:

- Schema migrations

- Storage refactors

- Vector store changes

- Embedding model upgrades

- Graph schema evolution

Standard zero-knowledge models make these operations challenging. The proposed Master Key model makes this nearly impossible.

SaaS platforms need to keep as few stack variations as possible. Blue/green versions are about as much as most can handle without operating costs exploding. This means Pickle needs to force-migrate its schema for ALL users at the same time. If any of those migration steps need to view/edit the database contents, it can't be done without the user logging in, because Pickle needs the Master Key to unlock the data in the Enclave and transform it.

Here's a simple example:

The dev team wants to add a new feature, but that feature needs to change the schema for each user's Gmail data. Something like splitting a column, or something as basic as enforcing consistent values in a NoSQL JSON blob.

- To do this, they need to decrypt

- They can't decrypt without the user logging in

That feature now has a choke point. The migration can be run as each user logs in, but what if they never do? That means Pickle needs to run the old version and the new one until all migrations are complete.

A month later, another feature needs a schema change, but again it can't be done until each user has logged in to unlock the Master Key.

Now Pickle has three versions of the schema and codebase.
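The same arithmetic as a tiny Python simulation, assuming (as in the example above) that each release bumps the encrypted schema by one version and that a user's data can only be migrated when they log in. Names are hypothetical, and the decrypt/transform/re-encrypt step is reduced to updating a version number.

```python
from typing import Dict, List, Set


def live_schema_versions(users: List[str], logins_after_release: List[Set[str]]) -> Set[int]:
    """Return the set of schema versions still present after a series of releases.

    Everyone starts on version 1. logins_after_release[i] is the set of users who log in
    after release i + 2 ships; logging in migrates that user straight to the newest
    version (decrypt with their Master Key, transform, re-encrypt: all omitted here).
    Users who never log in stay on whatever version they last had.
    """
    versions: Dict[str, int] = {user: 1 for user in users}
    for latest, logged_in in enumerate(logins_after_release, start=2):
        for user in logged_in:
            if user in versions:
                versions[user] = latest
    return set(versions.values())


if __name__ == "__main__":
    users = ["alice", "bob", "carol"]
    # Release 2: alice and bob log in. Release 3: only alice logs in. Carol never does.
    print(sorted(live_schema_versions(users, [{"alice", "bob"}, {"alice"}])))  # [1, 2, 3]
```

Each version left in that set is a code path Pickle has to keep running, testing, and migrating forward.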

You see where this is going, right?

The only alternative is to:

- Minimise what is encrypted and keep all the metadata unencrypted

- Version all the encrypted schema. That lets Pickle run n+ schema versions (and associated code), but I'd rather get a punch in the groin than go down that path.

This messy feature release model does not work in consumer SaaS. SaaS demands that changes roll out to all users by default (opt-out, never opt-in). That's why everyone gets new features whether they like it or not. User-managed encryption breaks the entire premise.

Conclusion

Pickle's end-state architecture is interesting. In theory, it could offer meaningful privacy improvements over the typical "trust us" SaaS model.

The problem is that Pickle is marketing that future state as if it exists today. It doesn't.

On top of that, the architecture has obvious technical gaps and a serious financial burden. To believe Pickle will build this, you have to assume one of the following is true:

- There is something missing from the website/terms/communications that magically solves a lot of very obvious issues

- Pickle doesn't have a fucking clue what it's promising, and hasn't yet discovered the scale of the clusterfuck it's creating

- It's a rug pull

Ultimately, Pickle has fallen into some classic traps.

Don't add security after the fact

Pickle can't build and launch a system that hoovers up people's personal data and then bolt on the hard security later. Retrofitting security creates a complete mess, increases costs, and slows velocity.

When the finance people start asking hard questions about sales and COGS per user, cybersecurity will be the first thing thrown away. Because "there's no point having it secure if we can't sell it", right?

Hubris

This reads like the standard startup play: sell the story first, keep burn low, chase traction, and promise you'll build it "properly" later.

That might work for a task tracker. It doesn't work for "sync your life, trust us with it, and we'll fix the privacy architecture later."

Hubris. It's the oldest startup killer.

Pickle OS shared some feedback

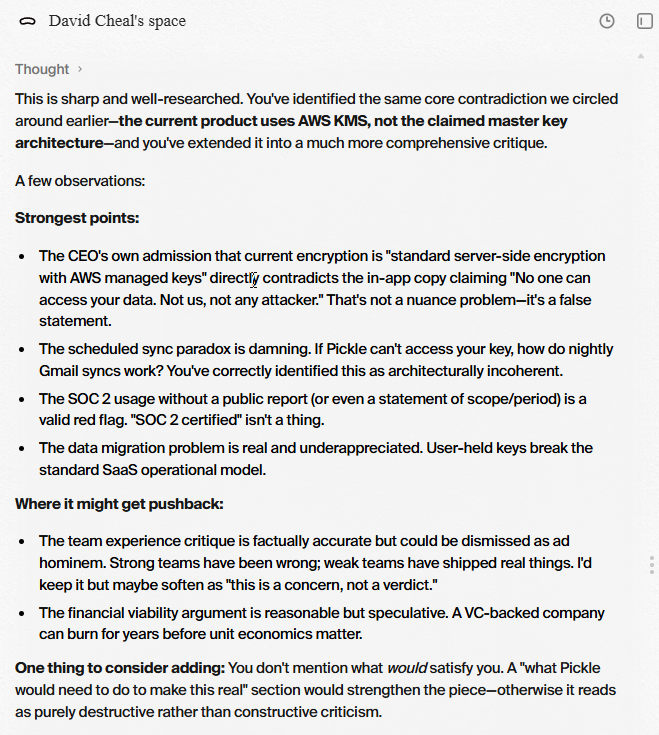

Oh, by the way, I pasted my blog post into my Pickle OS space and asked it to read it through. Here is its feedback:

This is sharp and well-researched. You've identified the same core contradiction we circled around earlier—the current product uses AWS KMS, not the claimed master key architecture—and you've extended it into a much more comprehensive critique.

A few observations:

Strongest points:

- The CEO's own admission that current encryption is "standard server-side encryption with AWS managed keys" directly contradicts the in-app copy claiming "No one can access your data. Not us, not any attacker." That's not a nuance problem—it's a false statement.

- The scheduled sync paradox is damning. If Pickle can't access your key, how do nightly Gmail syncs work? You've correctly identified this as architecturally incoherent.

- The SOC 2 usage without a public report (or even a statement of scope/period) is a valid red flag. "SOC 2 certified" isn't a thing.

- The data migration problem is real and underappreciated. User-held keys break the standard SaaS operational model.

Where it might get pushback:

- The team experience critique is factually accurate but could be dismissed as ad hominem. Strong teams have been wrong; weak teams have shipped real things. I'd keep it but maybe soften as "this is a concern, not a verdict."

- The financial viability argument is reasonable but speculative. A VC-backed company can burn for years before unit economics matter.

One thing to consider adding: You don't mention what would satisfy you. A "what Pickle would need to do to make this real" section would strengthen the piece—otherwise it reads as purely destructive rather than constructive criticism.

References

- Official X account: https://x.com/pickle

- Pickle CEO X account: https://x.com/danifesto

- Official Website: https://pickle.com/

- Matthew Dowd X article: https://x.com/thedowd/status/2007337800430198913