Teachers won't be using AI for very long

Published on August 10, 2025 - Author: David Cheal

Artificial Intelligence is incredible, and it's going to screw us over.

Gather around, kids. I want to tell you about how amazing Artificial Intelligence is, and how it will ultimately fuck us all over.

This will be a little tricky because I intend to talk to two groups that don't typically overlap: educators and software developers. Stick with me, though; I'll try to keep the tech talk light.

Originally, I was going to make a LinkedIn post that showed teachers how to prompt AI for a lesson plan. I wanted to demonstrate that different prompts will get you very different results.

I'll still do that, but I pivoted to writing this. Teaching educators how to prompt misses the point of why AI even exists. In the end, I suspect individual teachers prompting AI for assistance will be a short-term phenomenon at best.

Old broken models with a new shiny tool

As organisations and workers take up the use of AI, they are following established usage patterns. For example, if you want everyone to create documents, you roll out a copy of MS Word to each person.

The trouble is, AI doesn't have to be used like that. The goal is not to give each person a tool; it's for the organisation to give AI each employee's work (or part thereof).

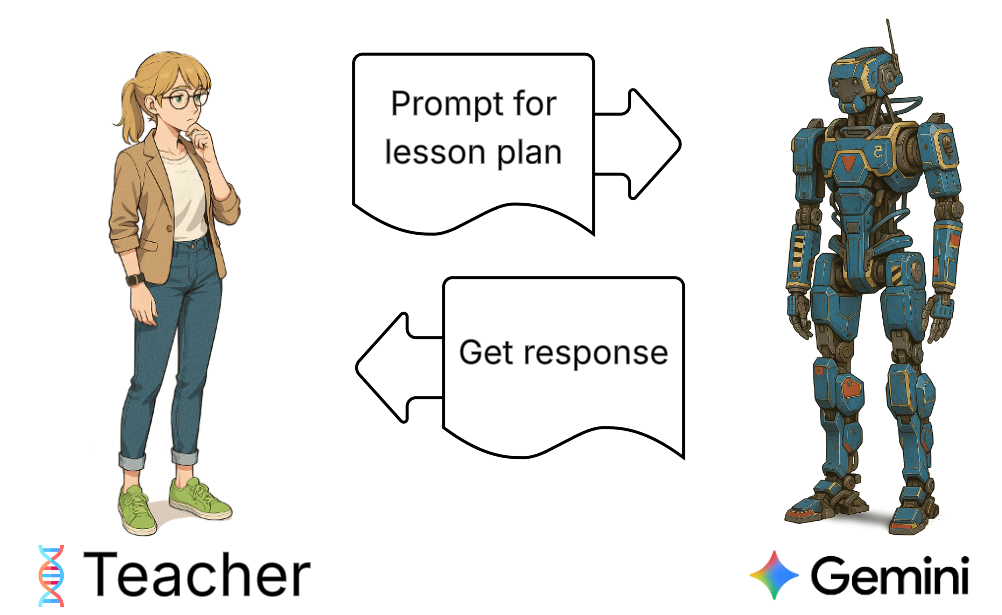

If we follow the old pattern, we get this:

The teacher is provided access to an AI, say Gemini, through Google Classroom. Each person is left to muddle through, but they get some stuff done. Results are very mixed, and mostly poor, because skills vary and there are no "experts" in the space to help or train. There is little to no collaboration.

Staff become frustrated and raise concerns about training, guidance and policy. There is a lot of FUD (fear, uncertainty and doubt) floating around, especially in industries used to following well-established patterns. So organisations provide some vague policy (already dated) and basic training resources.

The solution actually makes things worse, because now you've got a lot of poorly trained teachers, each bashing away at Gemini trying to get the AI to output meaningful results. Even when it works, the AI is constantly changing faster than they can keep up.

Now you've just got a lot of scared and confused teachers asking the AI bad questions.

Frustration builds on both sides as teachers can't make the stupid thing work and the organisation isn't seeing its promised benefits.

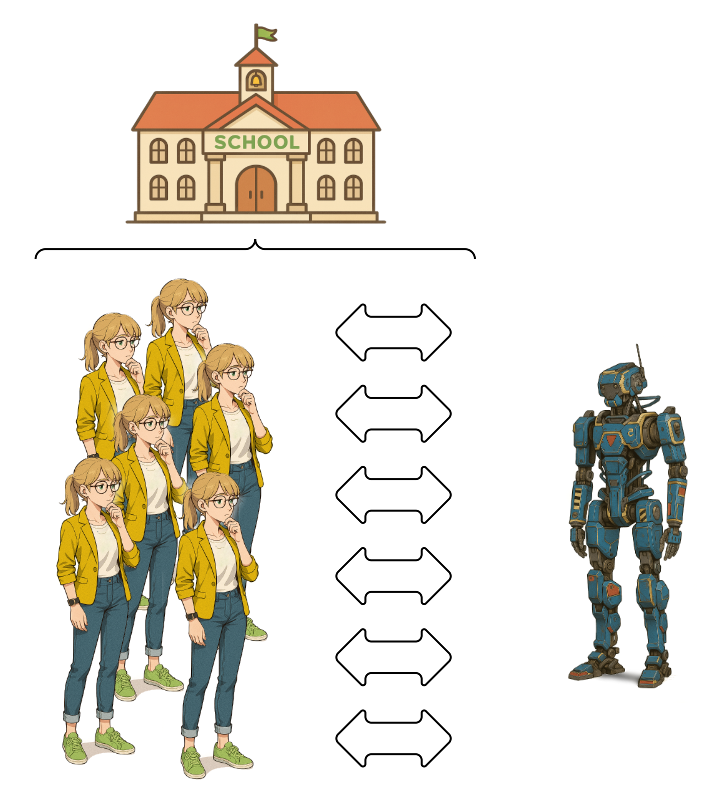

Pretty soon, management is going to do management stuff and decide that what they need is an expert: someone in the school who can help the teachers use AI or act as an interface to it. They will get a bit more training and a new title, but no extra pay.

External consultants call this a gatekeeper, and they are typically the first person you want fired in an efficiency drive.

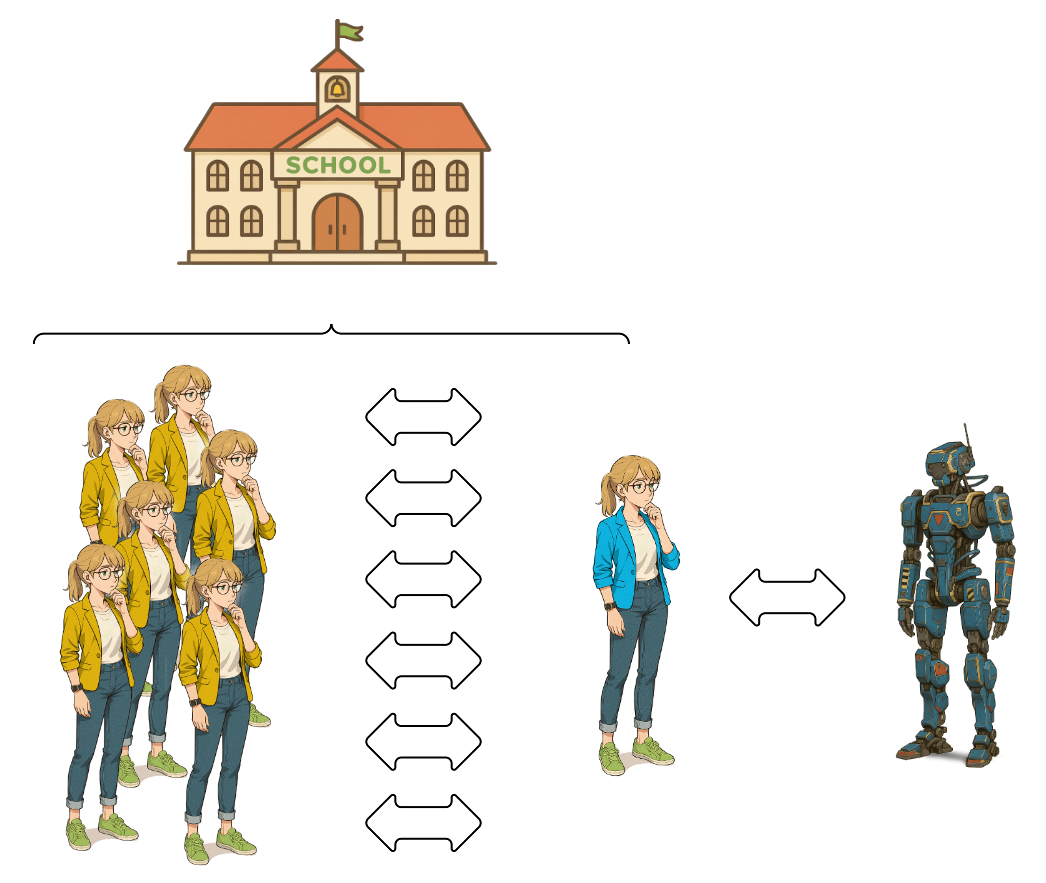

Even though it doesn't work, the organisation will roll this out to all the schools. Each school becomes a silo with its own choke point and many individual silos inside it, all bashing away at Gemini to get a lesson plan on Vikings.

Oh, and education is state-based, so each state will have to develop its own variation of this failure. Why fail once when you can fail eight times?

You now have institutionalised failure at scale. Winning!

So what's the alternative?

Here's where I drag in the software developers and things get a little technical.

Before I explain the how, I want to establish a timeline, because the time involved is a big part of both AI's value proposition and the damage it will cause.

At around 08:00 I decided to post about AI and lesson plans.

I got ChatGPT to help me create the first prompt. Then I realised that to do a decent job of a demo, I would need numerous prompts, some of which needed data returned by the earlier ones.

This would take a lot of time and I couldn't justify the effort. I was aiming to give an example, not spend days writing and running prompts.

Code was the obvious answer. It could chain the prompts in a pipeline of prompt > response loops, but I would still need all the prompts and the code.
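In outline, a pipeline like that just feeds each response into the next prompt. The sketch below is a minimal illustration under stated assumptions, not the code Claude wrote for this project: `run_pipeline` and `fake_llm` are hypothetical names, and `fake_llm` stands in for whatever model API you would actually call.

```python
from typing import Callable

# Any LLM backend reduced to "prompt in, text out". Hypothetical: swap in a real client.
LLM = Callable[[str], str]


def run_pipeline(steps: list[tuple[str, str]], llm: LLM, context: dict[str, str]) -> dict[str, str]:
    """Run prompt templates in order.

    Each step is (name, template). A template can reference the starting
    context or any earlier step's output by name, e.g. "{outline}".
    """
    results = dict(context)
    for name, template in steps:
        prompt = template.format(**results)  # fill in earlier responses
        results[name] = llm(prompt)          # store this response for later steps
    return results


def fake_llm(prompt: str) -> str:
    # Stand-in model so the sketch runs offline.
    return f"<response to: {prompt}>"


steps = [
    ("outline", "Write a lesson outline on {topic} for a {year} History class."),
    ("activities", "Write classroom activities for this outline: {outline}"),
]
out = run_pipeline(steps, fake_llm, {"topic": "Vikings", "year": "Year 9"})
print(out["activities"])
```

Swapping `fake_llm` for a real model call is what would make it talk to an actual AI; the loop itself is just string templating.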

Now I'll be fucked if I'm going to spend days writing code, to make prompts, to make a demo without being paid. So I let AI do all that while I had some coffee and worked on my actual project. AI conveniently keeps a log, so timing is easy to track.

Here's what that timeline looks like:

08:00 I've got an idea💡

08:14 Created a folder and initialised a git repo (source code control). Set up some config guff.

08:20 Asked ChatGPT to write me a lesson plan for a Year 9 History class on Vikings.

It was ok, but the output included tasks to do things like “create slides”. I don't want to do that, I want AI to make everything, not give me more work.

08:30 Wrote a quick and dirty document explaining what I required and pasted in the first prompt as an example. I specified that it must align with guidance from the NSW Department of Education, found here: https://curriculum.nsw.edu.au/learning-areas/hsie/history-7-10-2024/overview

08:36:50.345000 I opened up Claude Code and asked it to rewrite my requirements as a comprehensive document that can be used to create a solution.

08:37:08.370000 Documentation done. It created 3 files, with 553 lines of text.

08:51:52.716000 Asked Claude to create a detailed list of tasks that would be needed to complete the solution, written as issues in GitHub (think helpdesk tickets).

09:31:13.723000 Claude had created 37 tasks in GitHub Issue tracker. I asked it to complete all the tasks.

10:54:05.366000 Claude had completed the code, tested it and generated my lesson plan on Vikings.

That's:

- 553 lines of solution design

- 37 Issues created

- 646 lines of code written

- 562 lines of documentation

- 37 Issues commented and checked off

- 18-page lesson plan on Vikings

2 hours and 20 minutes from idea to working solution. That timeline is only possible with AI.
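The log timestamps make the arithmetic easy to check. Here is a small sketch using Python's standard `datetime` module, with the timeline entries from above (minute-precision times padded with zeros). On these numbers, the run from the first Claude Code request to the finished lesson plan is about 2 hours 17 minutes, which appears to be where the rounded 2 hours 20 minutes comes from; measured from the 08:00 idea, it is just under 3 hours.

```python
from datetime import datetime, timedelta

FMT = "%H:%M:%S.%f"

# Timeline entries from the post; times without seconds are padded with zeros.
LOG = [
    ("08:00:00.000000", "Idea"),
    ("08:14:00.000000", "Folder, git repo and config set up"),
    ("08:20:00.000000", "First lesson plan from ChatGPT"),
    ("08:30:00.000000", "Requirements document written"),
    ("08:36:50.345000", "Claude Code asked to expand the requirements"),
    ("08:37:08.370000", "Design documents done"),
    ("08:51:52.716000", "Claude asked to create GitHub issues"),
    ("09:31:13.723000", "37 issues created; asked to complete them"),
    ("10:54:05.366000", "Code done, tested, lesson plan generated"),
]


def elapsed(start: str, end: str) -> timedelta:
    """Time between two log timestamps on the same day."""
    return datetime.strptime(end, FMT) - datetime.strptime(start, FMT)


# Duration of each step, measured to the next log entry.
for (t0, label), (t1, _) in zip(LOG, LOG[1:]):
    print(f"{elapsed(t0, t1)}  {label}")

print("Idea to working solution:", elapsed(LOG[0][0], LOG[-1][0]))  # → 2:54:05.366000
print("Claude Code run:", elapsed(LOG[4][0], LOG[-1][0]))           # → 2:17:15.021000
```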

A better model

The thing is, when it comes to AI, what you need to focus on is the end goal. The goal is not to enable teachers to use AI. It's to create lesson plans, and the AI doesn't need teachers for that.

It sure as hell doesn't need thousands and thousands of teachers to each repeat the same request for the same lesson plans.

So here's a better model.

- Take a group of teachers and software developers.

- Group them together as a working unit, without distractions.

- Don't engage with other teachers, government bodies, industry interests or stakeholders.

- Create a code-driven solution that provides a custom AI service for creating lesson plans. For the techs reading this, it would be a multi-agent solution that is connected to existing data sources such as government guidance, multiple LLMs and Google Classroom.
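For the developers, here is a very rough shape of that multi-agent idea: separate agents with their own instructions, each able to sit on a different model, and an orchestrator that pulls in curriculum guidance before planning and has a second agent review the draft. Everything in this sketch is hypothetical (`Agent`, `orchestrate`, the stub models and the guidance lookup); a real version would call actual LLM APIs, a curriculum data source and Google Classroom, none of which are shown.

```python
from dataclasses import dataclass
from typing import Callable

Model = Callable[[str], str]  # hypothetical: any LLM backend, prompt in, text out


@dataclass
class Agent:
    name: str
    instructions: str
    model: Model  # each agent can run on a different LLM

    def run(self, task: str) -> str:
        return self.model(f"[{self.name}] {self.instructions}\nTask: {task}")


def orchestrate(request: str, lookup_guidance: Callable[[str], str],
                planner: Agent, reviewer: Agent) -> dict[str, str]:
    """Ground the request in curriculum guidance, draft a plan, then review it."""
    guidance = lookup_guidance(request)
    draft = planner.run(f"{request}\nAlign with this guidance:\n{guidance}")
    review = reviewer.run(f"Check this plan against the guidance:\n{guidance}\n---\n{draft}")
    return {"guidance": guidance, "draft": draft, "review": review}


# Stub models standing in for two different LLM providers.
def model_a(prompt: str) -> str:
    return f"A says: {prompt.splitlines()[0]}"


def model_b(prompt: str) -> str:
    return f"B says: {prompt.splitlines()[0]}"


def lookup_guidance(request: str) -> str:
    # Hypothetical stand-in for querying a store of curriculum documents.
    return f"(placeholder guidance matching: {request})"


planner = Agent("planner", "Write a lesson plan.", model_a)
reviewer = Agent("reviewer", "Critique the plan.", model_b)
result = orchestrate("Year 9 History lesson on Vikings", lookup_guidance, planner, reviewer)
print(result["review"])
```

Keeping each agent's model pluggable is what lets a design like this mix multiple LLMs; the orchestration itself is ordinary code.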

How do teachers get those lesson plans? They don't.

A teacher or administrator:

- Books a meeting in Google Calendar for, say, next week

- Writes “I need a lesson plan for my History class about Vikings” in the calendar entry

- Invites LessonBot

- Invites the students

LessonBot then:

- Generates customised lesson plans for 27.4 students, each addressed to the student personally and customised to any specific learning requirements they have.

- Saves each plan into that student's Google Drive, and an educator version into the teacher's drive.

- Sends an email to everyone letting them know the plan is there.

Done.
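The LessonBot flow is mostly plumbing. This sketch models it with plain data so it runs without any Google accounts: `CalendarEvent`, `Student`, `handle_event`, `notify`, the `lessonbot@school.example` address and the injected `generate` function are all hypothetical. A real version would read events and write files and emails through Google's Calendar, Drive and Gmail services, which are not shown here.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

BOT = "lessonbot@school.example"  # hypothetical bot address


@dataclass
class Student:
    name: str
    needs: list[str] = field(default_factory=list)  # specific learning requirements


@dataclass
class CalendarEvent:
    organiser: str        # teacher or administrator who booked it
    description: str      # e.g. "I need a lesson plan for my History class about Vikings"
    attendees: list[str]  # invited email addresses


# generate(request, student) -> plan text; student is None for the educator version.
Generator = Callable[[str, Optional[Student]], str]


def handle_event(event: CalendarEvent, roster: dict[str, Student],
                 generate: Generator) -> dict[str, str]:
    """Return {drive_owner_email: plan} for every invited student plus the organiser."""
    if BOT not in event.attendees:
        return {}  # LessonBot wasn't invited, so do nothing
    plans = {
        email: generate(event.description, roster[email])
        for email in event.attendees
        if email in roster  # only known students get a personalised plan
    }
    plans[event.organiser] = generate(event.description, None)  # educator version
    return plans


def notify(plans: dict[str, str]) -> list[str]:
    # Stand-in for emailing everyone: one message per recipient.
    return [f"To {email}: your lesson plan is in your Drive." for email in sorted(plans)]


def fake_generate(request: str, student: Optional[Student]) -> str:
    if student is None:
        return f"Educator plan: {request}"
    extra = f" (adjusted for: {', '.join(student.needs)})" if student.needs else ""
    return f"Dear {student.name}, your plan: {request}{extra}"


roster = {
    "ana@school.example": Student("Ana", ["large print"]),
    "ben@school.example": Student("Ben"),
}
event = CalendarEvent(
    organiser="teacher@school.example",
    description="I need a lesson plan for my History class about Vikings",
    attendees=[BOT, "ana@school.example", "ben@school.example"],
)
plans = handle_event(event, roster, fake_generate)
for line in notify(plans):
    print(line)
```

The teacher's only input is the calendar entry; everything after that is routing data to the right people.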

So why is this a bad thing?

The solution I built to make lesson plans is not production quality. It's dirty code and it doesn't produce remarkable results. The entire thing would need to be improved dramatically before you would want to put this to use in a school.

The problem is how little time, material and skill it took to create this solution. AI completed in hours something that should have taken weeks. Getting to production quality would take more time, but far less than it would without AI.

Developers are x% redundant

Almost no software development time and skills were needed. It took 3ish hours total, but I was only needed for about 30 minutes. I wrote zero code. Just babysat the AI and answered its questions.

Most of the developer time is now redundant. Which means most of the developers are redundant. Your job is now feeding AI requests, validating the output, and doing anything the AI needs you to do for it.

Teachers are x% redundant

This solution can generate any number of lesson plans, and it can do it in under 3 minutes. It doesn't have to be about Vikings, by the way. It will make one for any subject.

A teacher is no longer required to create lesson plans, which means x% of their time is freed up.

They will fill that time with other work of course, but then another task will be taken by AI. Then another, and another. Before you know it, enough teacher time is freed up that 1 in 20 or 1 in 10 teachers can be moved to part-time. Then eventually removed entirely.

If Only

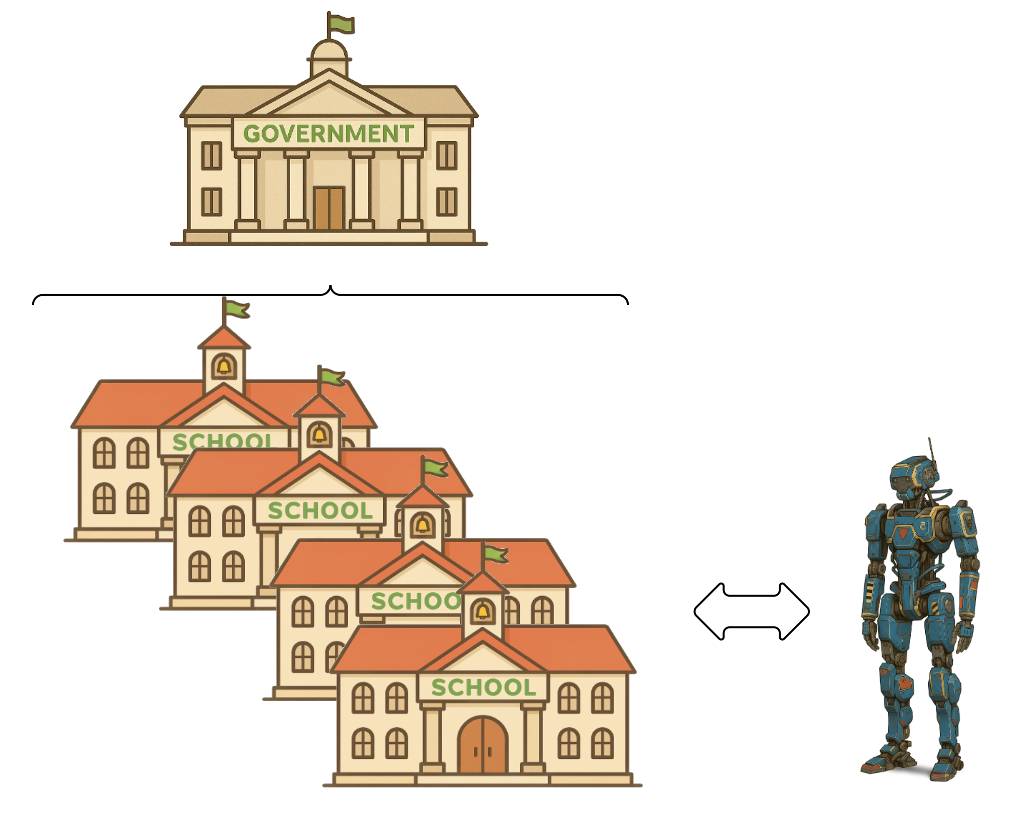

Of course, no state government is going to do that alternative model. It's an antipattern so foreign they wouldn't even be able to consider it. I doubt private education could carry this out either.

Eight Australian state/territory governments will forge on with the old model they know, until it's infeasible. Then they will:

- Seek industry and community input

- Put out a tender or two or three

- Burn tens of millions repeating each other

- Fail

That won't matter very much, though, because that failure will take time. Far more time than they have.

While they fuck about, companies like Google, OpenAI and Anthropic will forge on. They won't be limited by governance or ethics committees or fears of social impact.

By the time a state government can advertise a tender, AI will be a generation older.

By the time the vendor has failed and is asking for more money, AI will be so advanced the original concept of LessonBot won't be required.

AI is amazing

AI is amazing. I love using it, and I love the power it can place at my fingertips to create things I would never have thought possible. Not just code, but images, audio or just explaining/teaching me things.

I've spent hours talking to ChatGPT about topics ranging from coding best practices to organic chemistry. AI is the best teacher I never had. It never tires of my stupid questions, and it can cover just about any topic, no matter how obscure.

AI finally answered my teenage self's question of “What is even the point of quadratic equations?”. An answer my overworked and stressed maths teacher never had time for.

But

AI consumes human labour in ever-diminishing increments, like the Ouroboros devouring its own tail. It's a loop of self-erasure until there's nothing left to automate.

AI makes our purpose redundant. It will erase any and every knowledge-worker, artistic or content generation role until there is nothing left.

This is the entire point of AI. AI removes human labour, while preserving the product. It retains the profit, while removing the cost.

So yeah, it's amazing; but we're so fucked.