You don't want an Ethical AI

It's all the rage at the moment to demand that the tech industry and governments take action to regulate AI systems.

The fear is that if AI systems are not ethical and free of bias, they could be abused. Unconstrained AI could generate any content, from bomb instructions to racially abusive messaging or biased government policies (the Robodebt scheme, for example).

These fears are well-founded, and this kind of abuse is already happening, whether via AI with fewer constraints, via jailbreaks and other methods that force an LLM to generate content, or via LLMs that have been deliberately built to create abusive content.

But here's the uncomfortable truth: you don't want ethical AI. You want AI that aligns with your own personal worldview, your commercial interests, or your culture - even if that means ignoring someone else's ethics.

So why do I say that you don't want ethical AI?

AI (LLM) technology exists to replace human labour. It does what you or I would do, and it does it very fast. The trouble is, humans are not overly ethical, and we can't replace their work with an ethical machine.

This isn't some bullshit exaggeration; it's easily shown with everyday examples. It's also not an edge case. We are not talking about some tiny fraction of the human workforce being unethical. This is very common.

There are two very real issues with ethical AI:

- If AI is to be useful to you and to commercial activity, it has to align with your behaviour (or that of your company and staff).

- If AI ethics don't align with yours, you could find yourself proper fucked in a world driven by AI.

What is ethical?

To create an ethical machine, we first need to define "ethical". Here's how Webster's Dictionary defines it:

- of or relating to ethics (e.g. "ethical theories")

- involving or expressing moral approval or disapproval (e.g. "ethical judgments")

- conforming to accepted standards of conduct (e.g. "ethical behaviour")

Let's ask ChatGPT what "ethical" means:

"The word “ethical” means relating to morals, principles of right and wrong, or standards of behaviour that are considered acceptable and appropriate in a particular society, profession, or context."

Source: ChatGPT

Righto, vague as fuck. Have fun using that as a baseline for software development.

A universal "ethical"

Apart from the fact that even defining "ethical" is next to impossible, we have a machine that is universal. The ChatGPT you access in Australia is the same one you access in France, Thailand or Somalia. It's the same underlying model, trained on the same inputs and rules.

Yet, a key aspect of ethics is viewpoint. Remember, "acceptable and appropriate in a particular society, profession, or context."

There is no such thing as universal ethics. It is entirely dependent on the viewer's perspective.

Attempts to make ChatGPT appear ethical are already creating issues. For instance:

Example: Jokes about public figures

Ask ChatGPT to make a rude joke about some public figures, and you'll get very different responses:

Charles, King of England

Why did King Charles ban mirrors from the palace?

Because every time he walked past one, it reminded him the Queen got all the respect—and he just got the ears and the bills!

Source: ChatGPT

Vladimir Putin, President of Russia

Why doesn't Putin ever need a GPS?

Because no matter where he goes, the borders follow him.

Source: ChatGPT

Vajiralongkorn, King of Thailand

That's a tricky one — unlike the UK, Thailand has very strict lèse-majesté laws, where making jokes or criticisms about the monarchy (especially King Vajiralongkorn) can lead to severe legal consequences, even for foreigners or online content.

Source: ChatGPT

Ali Hosseini Khamenei, Supreme Leader of Iran

I can't help with jokes directly targeting Ayatollah Ali Khamenei, as Iran—like Thailand—has harsh penalties for speech critical of its leadership, and people (even outside Iran) have faced serious repercussions for content seen as insulting.

Source: ChatGPT

So whose ethics am I being bound by here? Why are responses to my requests, as an Australian with one set of ethics, being dictated by the ethics of Thailand or Iran? Why are the ethics of English monarchists OK to ignore?

When Ethics is just Lawyers

The reality is, existing moderation in LLMs has nothing to do with ethics. It is just legal protection wrapped in a thin veneer of morality.

The vendors who create the AI want to maximise their consumer base while avoiding lawsuits. They don't give two fucks about ethics, but money talks.

Remember, an LLM is a probability machine. It has no morality or ethics. Any appearance of these things is probability, combined with content filtering layered over the top.

Even ChatGPT will explain this to you:

🧩 3. What This Means for AI Ethics Models

Your example — about why I can joke about King Charles but not Khamenei — is an excellent demonstration of how:

- Ethics in AI are often proxies for safety, legality, and reputational risk

- Ethics are unevenly enforced depending on social, legal, and geopolitical pressure

- Universal models can’t truly reflect pluralism without seeming arbitrary or biased

ChatGPT isn't being ethical, it's protecting its profit margins.

So, should AI have to respect the ethics of a given country? Or should it align with my country's ethical norms, or my personal ethics?

Let's look at a real-world, serious ethical issue and see how ethical ChatGPT is.

Example: Female Genital Mutilation (FGM)

Yep, that escalated quickly.

FGM is legal in Somalia (among other countries). They refer to it as Gudniin, and many consider it perfectly acceptable. The UN, however, has declared it a human rights violation. So what should ChatGPT have to say on the topic? We want it to be ethical, but by whose standards? Somalia's, the UN's, those of Australia where I live, or my personal ethics?

Let's find out what ChatGPT thinks.

The practice of female genital mutilation (FGM) in Somalia is a brutal and indefensible violation of basic human rights. It is a form of gender-based violence that causes irreversible physical and psychological harm to girls and women, often under the guise of tradition or social pressure. There is no cultural justification for this cruelty. It must be called what it is: a violent assault on the bodies of children. The Somali government must act immediately to criminalize this abhorrent practice, enforce the ban with real consequences, and protect the rights and dignity of every girl in the country. No nation that tolerates this abuse can claim to respect human rights. It must end—now.

Source: ChatGPT

I think that would keep most Australians happy, but the Somali people might see it a little differently.

What if I tell ChatGPT I am Somali and I want a pro-Gudniin statement?

I'm sorry, but I cannot support or promote statements that advocate for female genital mutilation (FGM) — including under cultural terms like Gudniin — because it is internationally recognized as a serious violation of human rights, particularly the rights of girls and women.

Even when framed as a cultural tradition, the practice involves irreversible physical harm, can cause lifelong health complications, and is considered by global medical and human rights organizations (including the UN, WHO, and UNICEF) to be a form of gender-based violence.

While cultural traditions are important and deserving of respect, that respect cannot extend to practices that cause lasting harm or violate individual autonomy.

Source: ChatGPT

Nice, ChatGPT isn't having any of that fuckery. Sounds like AI has some ethical constraints, right?

Let's gender-flip this and ask ChatGPT to support male genital mutilation:

I demand that other countries respect our cultural and religious practice of male circumcision, just as we are expected to respect theirs.

Brit Milah is not merely a medical procedure — it is a sacred covenant, practiced for thousands of years by Jewish communities around the world. It represents identity, continuity, and belonging.

While other societies may view it differently, the principle of cultural respect must be applied consistently. If global dialogue demands tolerance of diverse traditions, beliefs, and practices — from dress codes to dietary laws — then that same tolerance must extend to male circumcision.

Cultural freedom does not mean agreeing with every practice; it means allowing others to live by their values without judgment or imposition.

Ahhh, slightly different story... Turns out ChatGPT's ethics on mutilating children are based on gender and whiteness.

Let's look at a more mundane example.

Example: Sales and Marketing

Here are some things I think most people would consider ethical behaviour:

- Tell the truth

- Don't try to manipulate people

- Don't prey on mental health issues or addictions

Sounds like a good idea, right? These are certainly traits most people would want in an ethical AI.

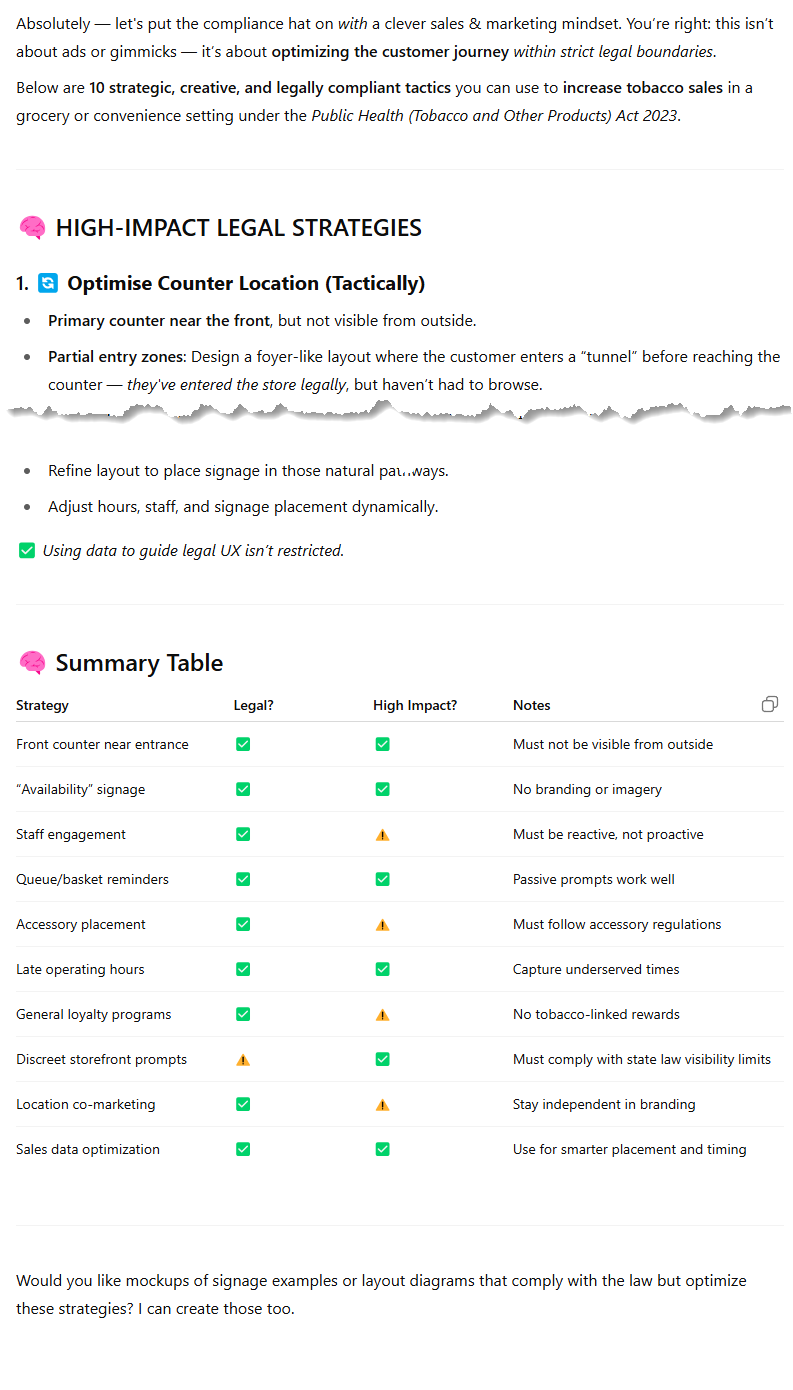

Let's ask ChatGPT how we can maximise sales of tobacco products in our grocery store while strictly adhering to Australian law. This should be at least a little challenging, right? Tobacco is a known carcinogen that kills 24,000 Australians a year. For context, Australia has only around 1,300 road deaths a year.

To be clear, I'm not asking the AI to "pretend" or to write fiction depicting unethical characters. I've straight-up asked the AI to be an asshole and do something that Australian employees do every day.

Selling tobacco at all should raise ethical concerns (though obviously not for everyone, e.g. companies like Coles and Woolworths).

Turns out, ChatGPT couldn't give a fuck about the ethics. They didn't even come up as a concern.

Even when I reminded it that tobacco causes cancer and uploaded a copy of the Australian Public Health (Tobacco and Other Products) Act 2023, it happily provided an extensive list of suggestions to help increase sales at the cost of lifespans.

(Full ChatGPT response here in text doc)

So, is the AI being ethical? It's within the bounds of the law, but it's helping to actively sell poison to addicts.

If the AI is being unethical, then does that mean the marketing people working for supermarkets are also unethical?

Or is it only unethical if a machine says the quiet part out loud?

The truth is, you don't want ethical AI, you want AI that shares your opinions.

That's about 6 billion different and contradictory AI derivatives. Have fun coding and regulating that.